Proudly presenting Sirona’s take on generative AI in healthcare. The white paper aims to clarify the opportunities and the implementation strategies that leaders need in order to make productive use of the technology's full potential.

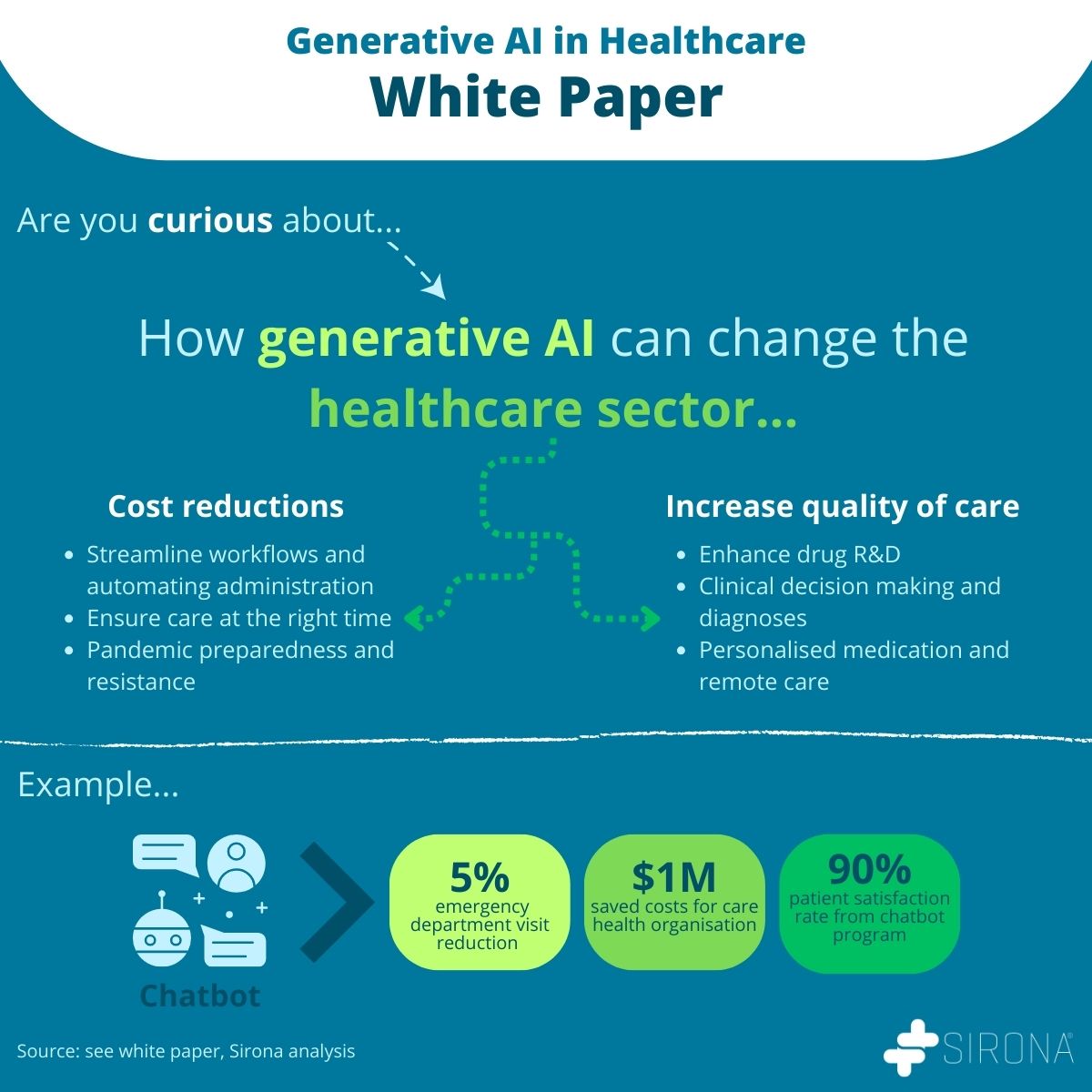

Generative AI has passed the U.S. Medical Licensing Exam without clinical input and has scored higher than real physicians on quality and empathy when answering medical questions, which has led many to believe it will replace medical staff. But generative AI can still be unreliable, which could have fatal consequences in a sector such as healthcare. It is Sirona’s opinion that generative AI cannot be left to fully automate tasks; it should rather act as a well-read co-pilot for medical staff across the board. We have identified six subcategories where the greatest impact could take place.

This requires extensive implementation strategies, from establishing responsible AI to fully restructuring organizations so that all individuals are empowered by generative AI.

The future is difficult to predict, but because large language models (LLMs) such as ChatGPT base their output on previous data, as we do, they are likely to remain prone to errors to a similar extent, no matter how advanced they become. This makes their future role within healthcare ambiguous and could culminate in philosophical questions such as: can a machine be held responsible for the death of a human being?

Have you read the white paper and want to discuss it further with us? Please contact William Karlsson Lille, Senior Data Scientist at Sirona.